Archant and Google Bring 160 Years of History to Life with ubisend

Product used: fully custom chatbot.

Founded in 1845, Archant is a privately-owned UK media group. Archant has been reporting local news for Norfolk and the surrounding region for over 160 years in various newspapers and magazines. In 2020, the group publishes four daily newspapers, over 50 weekly newspapers, and over 80 consumer magazines.

As the company continues to innovate with new media technology, they wanted to explore the emerging chatbot market. In 2017, digital development director Lorna Willis reached out to ubisend with a unique project in mind.

What if we could turn Archant's extensive newspaper archives, millions upon millions of articles on paper, into an interactive, voice-activated chatbot?

The opportunity (and challenge) was too good for ubisend to pass on. Archant and ubisend were soon joined by Google to create a first of its kind: a truly ingenious product in an industry desperate for innovation.

Seeking an innovation specialist

Archant's journey with ubisend began when digital development director Lorna Willis stumbled upon some of our online content. After reading a few pieces, she reached out to our team to throw a tentative idea in the air.

Could we, hypothetically, scan 160 years' worth of old newspapers, extract their content into a digital, processable format, and use this (enormous) database of stories as a hub of local knowledge for an AI-driven voice chatbot?

Lorna's vision and enthusiasm were palpable, and contagious. The teams met a few times to explore the idea further and, with every meeting, the project took form.

"Because of where we are now, it's hard to recall how much of an unknown this project was back then. Almost every single part of Lorna's idea had never been done before", recalls Alex Debecker, ubisend's CMO and project manager on Local Recall.

Turning a liability into an asset and creating new revenue streams

To Archant, the project wasn't just about innovation. Though the idea of creating a first-of-its-kind was certainly appealing, the main motivation behind this project was two-fold:

Turn a liability into an asset. Archant's paper archives are old (160+ years). Time has taken its toll on some of the oldest newspapers. Not only are they at risk of getting lost, but they are also becoming more and more expensive to store and keep in reasonable condition. Digitising them and turning them into a revenue-generating asset would be a full 180.

Generate new revenue streams. The traditional newspaper business is no longer enough for a media company. The group has consistently explored new avenues, including marketing services, TV production, events, and more. With this project, Archant could explore voice and conversational software as a new revenue-generating channel.

Local Recall was born.

Securing Google funding

In 2017, Google was running a European funding programme called the Digital News Initiative. This programme helped journalism thrive in the digital age by funding various innovative projects.

The Local Recall project had no shortage of innovation, so Archant and ubisend partnered up to apply for funding from Google's DNI fund. The project impressed Google enough to be awarded one of the fund's largest grants for the year: almost one million euros.

With this funding secured, the dream could be set aside and make way for something even more exciting: two years of problem solving, technological innovation, and teamwork to bring Local Recall to life.

Turning old newspapers into an AI-driven brain

So, what is it we are doing?

The premise is simple. Archant has hundreds of thousands of pages of content in analogue format. We need to turn it all into a real-time text and voice conversation.

Our first goal was for a user to be able to ask things like:

What happened today in 1934?

What was the headline news on the 4th of January 1899?

This would return the news that happened on these particular dates in a natural, conversational way.

Eventually, the goal is to build a product with such extensive knowledge of local news that it could answer questions like:

Tell me the headlines on the Queen’s coronation.

When was the last time Norwich Football Club won a game 6-0?

What else happened on the day the Second World War was declared?

To resurface the right content, we normalise, label, and store an enormous amount of data gathered from Archant’s archives.

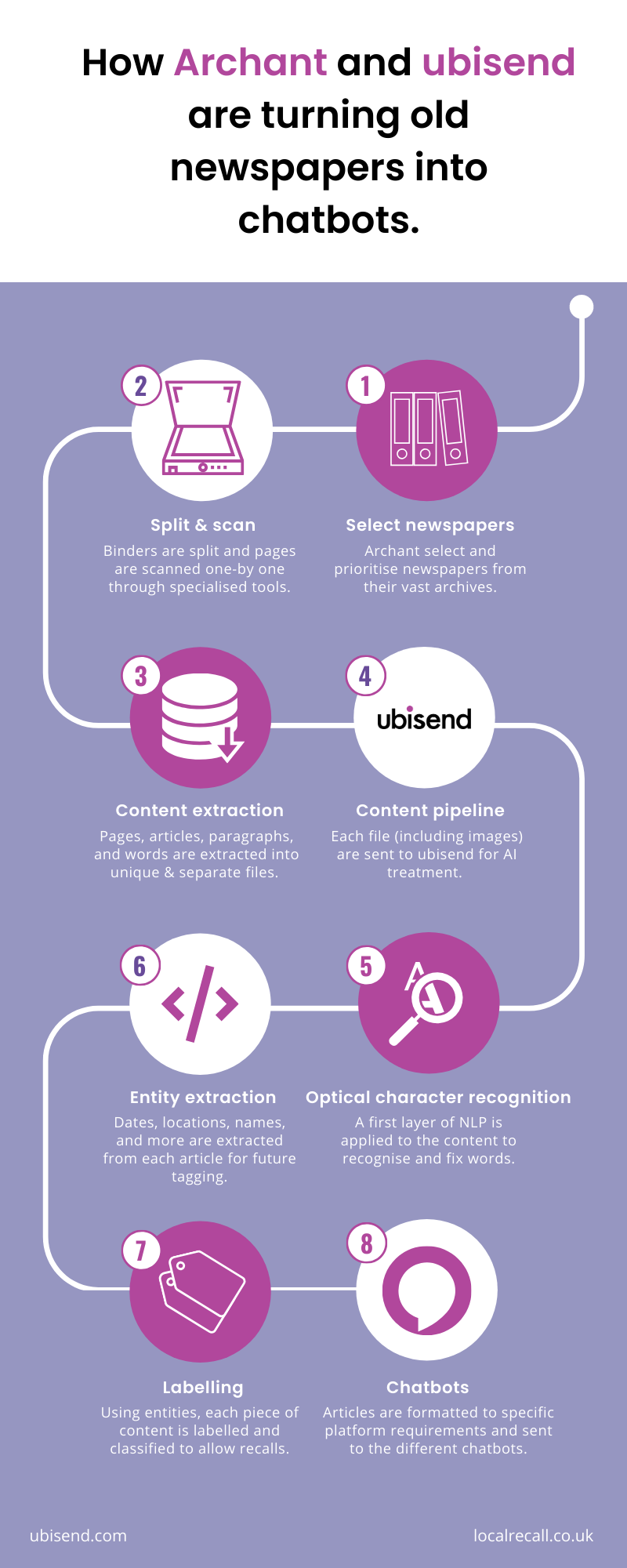

To conceptualise the project, here is a high-level overview of the process ubisend put in place to transform physical, old newspapers into artificial intelligence software.

The development team at ubisend put this structural plan on paper at the start of the project in 2018. With this in hand, we started our journey to making it happen.

Braving unique technical challenges

Over the course of the project, we faced quite a few challenges. Some were expected. Others came as a bit of a surprise.

As a business, we pride ourselves on our ability to creatively problem-solve with technology. So we did just that. Here are five examples that show both the challenges we faced and our approach to solving them with advanced, bespoke AI software.

1. Dealing with big data

The scale of the Local Recall project is hard to comprehend. We can start with some basic numbers.

There are over 160 years' worth of newspaper archives. These represent approximately 700,000 newspaper pages.

In the old days, newspaper pages were significantly bigger than today. Per page, there is an average of 20 articles.

This initial batch of content therefore mounts up to approximately 14,000,000 articles.

14 million articles is already an impressive figure, but it doesn't stop there. This only represents the archives of one of Archant's newspapers (remember, they own four daily newspapers, over 50 weekly newspapers, and over 80 magazines).

This number also only accounts for paper archives. From 1990 onwards, Archant started publishing online. Between 1990 and 2000, Archant released 12 million online articles.

Is your head spinning yet? Well, hold on to your hats.

Each article is filled with entities (places, people, dates, etc.) that our systems must recognise, extract, and classify. This process is what eventually allows Local Recall users to use natural language to recall articles (e.g. 'What happened in Norwich on June 14, 1983?').

Assuming the average article contains 200 words, we are firmly entering the world of big data. We stopped counting at approximately 4 billion words stored from the archived newspapers.
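The print archive figures above multiply out as follows. This is only a back-of-the-envelope check in Python; the variable names are ours, and the 200-word average is the same assumption made in the text.

```python
# Back-of-the-envelope check of the print archive figures quoted above.
pages = 700_000            # scanned pages from one newspaper's archive
articles_per_page = 20     # average quoted above
words_per_article = 200    # assumed average, as in the text

print_articles = pages * articles_per_page
print_words = print_articles * words_per_article

print(f"{print_articles:,} articles")   # 14,000,000 articles
print(f"{print_words:,} words")         # 2,800,000,000 words
```

Under the 200-word assumption, this first batch alone comes to roughly 2.8 billion words. Longer articles, and the online content, push the total higher.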

2. Scaling the project's architecture

The pipeline of articles going from paper to scan, to finally being extracted into workable data, is extremely complex. One of the many intricacies of the content pipeline we built is dealing with images.

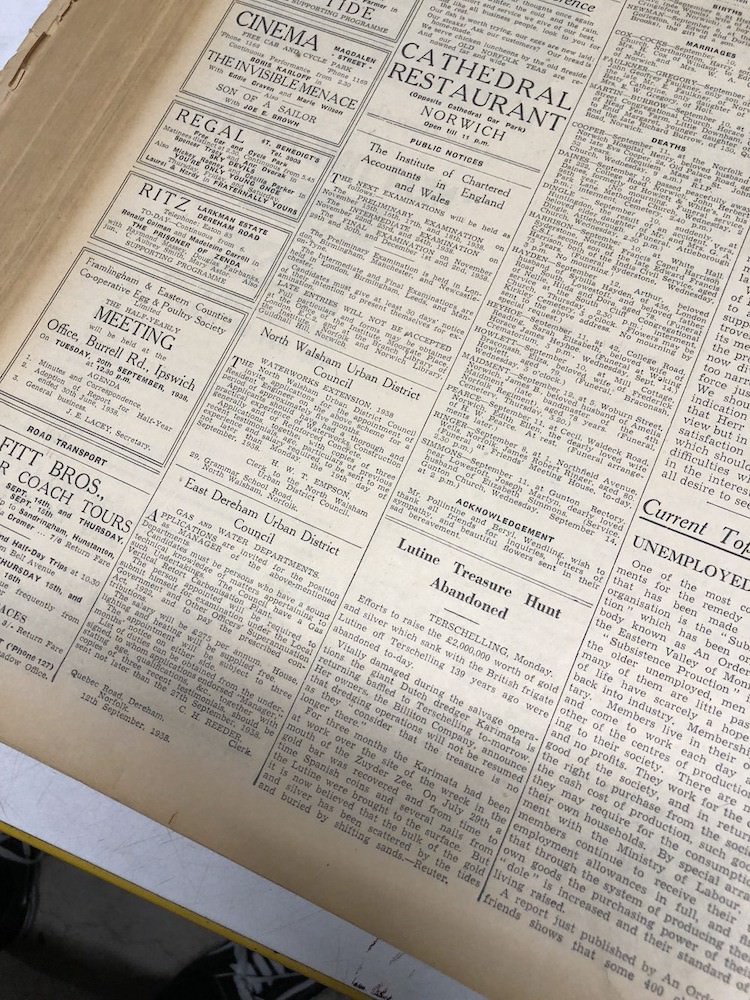

Old newspapers are cool to look at. I mean, just look at this:

So cool!

In the process of digitising the content, we didn't want to lose this unique view of the past. So, as well as scanning and extracting words, we keep high-quality scans of every newspaper page.

Each article recalled is tied to its original scan. Where the device allows it, users can preview the page and get a feel for the original experience while accessing the content via the latest technology.

Digital copies must be high-quality. If not, they quickly become illegible. This means each page is stored in JP2 format, at approximately 30MB. Carried over 700,000 pages (for now), that's a database of 21TB just for the scans. Each scan must be swiftly accessible as and when a user recalls an article.
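The storage figure can be checked the same way. This is a rough estimate only, using decimal units (1 TB = 1,000,000 MB) and the approximate scan size quoted above; the variable names are ours.

```python
# Rough storage estimate for the page scans (decimal units: 1 TB = 1,000,000 MB).
pages = 700_000
mb_per_scan = 30           # approximate size of one JP2 page scan

total_mb = pages * mb_per_scan
total_tb = total_mb / 1_000_000

print(f"{total_tb:.0f} TB")   # 21 TB
```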

DevOps and database infrastructure have been an interesting challenge to solve! One of our developers dove into the topic in a recent talk (warning: this does get into the weeds!).

3. Bringing voice into the browser

The ultimate goal of Local Recall is to make Archant's content available. It's much more than merely storing digital copies of the newspapers before they disintegrate. Availability is key.

The project initially focussed on making the content accessible via an Alexa chatbot. But we didn't stop there.

A significant audience segment for Local Recall is expected to be the older generation. These individuals would use Local Recall to re-live stories from their past. Unfortunately, the same segment is unlikely to have an Alexa at home.

We still wanted to deliver a voice experience. So, we made our chatbot available straight from the browser. All users have to do is enable their microphone in the tab, and off they go!

This presented a series of new challenges. From encoding the users' audio in real time, to converting the frequencies to make the process compatible across devices, to finally passing the content to our algorithms and sending a response back, the whole process was new, complex, and exciting.

Our lead developer on this part of the project, Matthew Williams, wrote an excellent (but very technical) piece on this particular challenge: Recording audio with React for Amazon Lex.
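To give a feel for the frequency conversion step mentioned above, here is a minimal sketch. It is not the production code (which runs in the browser); it is a Python illustration that assumes the speech service wants 16 kHz, 16-bit, mono PCM audio, and the function names `resample_linear` and `to_pcm16` are made up for this example.

```python
import struct

def resample_linear(samples, src_rate, dst_rate):
    """Convert samples from src_rate to dst_rate using linear interpolation."""
    if not samples:
        return []
    n_out = int(len(samples) * dst_rate / src_rate)
    out = []
    for i in range(n_out):
        pos = i * src_rate / dst_rate           # position in the source signal
        left = int(pos)
        right = min(left + 1, len(samples) - 1)
        frac = pos - left
        out.append(samples[left] * (1 - frac) + samples[right] * frac)
    return out

def to_pcm16(samples):
    """Encode float samples in [-1.0, 1.0] as little-endian 16-bit PCM bytes."""
    clipped = [max(-1.0, min(1.0, s)) for s in samples]
    return struct.pack("<%dh" % len(clipped), *(int(s * 32767) for s in clipped))

# One second of silence captured at 44.1 kHz (a common microphone rate).
captured = [0.0] * 44100
converted = resample_linear(captured, 44100, 16000)
payload = to_pcm16(converted)
print(len(converted), len(payload))   # 16000 32000
```

Plain linear interpolation keeps the sketch short. A real pipeline would normally low-pass filter the signal before downsampling to avoid aliasing.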

Later, we also built text and Google Assistant versions of the same experience.

4. Entity extraction at huge scale

Entities are the words such as locations, persons, and events present in the text. For Local Recall to work, we need to locate, extract, and label these entities throughout the entire database of content. This process is what allows us to return a relevant article to a user.

For example, if a user asks 'read me an article about the Queen in Norwich in 1956', we need to return an article that has a combination of 'Queen' (person), 'Norwich' (location), and '1956' (date).
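The lookup described above can be sketched with a simple inverted index. This is an illustration under our own assumptions, not the Local Recall implementation: the article ids, the entity labels, and the `build_index` and `find_articles` helpers are all hypothetical.

```python
from collections import defaultdict

# Hypothetical articles, already labelled with (entity_type, value) pairs.
ARTICLES = {
    "a1": {("person", "queen"), ("location", "norwich"), ("date", "1956")},
    "a2": {("person", "queen"), ("location", "london"), ("date", "1953")},
    "a3": {("location", "norwich"), ("date", "1956")},
}

def build_index(articles):
    """Map each entity to the set of article ids that mention it."""
    index = defaultdict(set)
    for article_id, entities in articles.items():
        for entity in entities:
            index[entity].add(article_id)
    return index

def find_articles(index, wanted):
    """Return the ids of articles that contain every requested entity."""
    id_sets = [index.get(entity, set()) for entity in wanted]
    return set.intersection(*id_sets) if id_sets else set()

index = build_index(ARTICLES)
query = [("person", "queen"), ("location", "norwich"), ("date", "1956")]
print(find_articles(index, query))   # {'a1'}
```

Building the index once and intersecting small sets at query time avoids scanning every article for every question.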

Now, as complex as this may sound, this is actually pretty standard for us. Entity recognition is part of all our chatbots' inner workings.

What made this particular project more complex was the hyper-localisation of some of the entities we had to work with. Because Local Recall is a project based on a very local part of the UK (Norfolk), the entities needed to match that hyper-locality.

Archant picked 100 hyper-local entities the chatbot had to be able to recognise. Because they are so specific, we had to manually train our models initially using 1,000 articles.

This phase of the project allowed us to kill two birds with one stone.

Entity training. We could teach our algorithms to recognise new words like Wroxham as a city, not a made-up word.

Context. We could teach our algorithms to tell the difference between entities based on the context of the article.

For example, 'Queen' doesn't have the same meaning in 'Queen Elizabeth arrived at 2PM' and 'Roadworks on Queen's Road are due to continue'.
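To make the idea of context concrete, here is a deliberately naive sketch. The project trained models for this; the code below swaps in a single hand-written rule (look at the word that follows 'Queen') purely for illustration. The `STREET_WORDS` list and the `classify_queen` function are hypothetical.

```python
import re

# Words that, when they follow "Queen" or "Queen's", suggest a street name.
STREET_WORDS = {"road", "street", "avenue", "lane", "square"}

def classify_queen(sentence):
    """Label a mention of 'Queen' as 'person' or 'location' from the next word."""
    match = re.search(r"\bQueen(?:'s)?\s+(\w+)", sentence)
    if match is None:
        return None  # no mention followed by another word
    next_word = match.group(1).lower()
    return "location" if next_word in STREET_WORDS else "person"

print(classify_queen("Queen Elizabeth arrived at 2PM"))           # person
print(classify_queen("Roadworks on Queen's Road are due to continue"))  # location
```

A trained model learns many such signals from labelled articles instead of relying on one fixed rule.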

5. Adapting to different formats

By now, you may have noticed something somewhat interesting: this project deals with lots of content formats.

The content is accessible across:

- Website text bot

- Website voice bot

- Website search tool

- Alexa

- Google Assistant

- Siri

Each of these requires different formatting and data markup. But that's not all.

We also take content from different data sources:

- Content scanning company Findmypast

- Content scanning company Townsweb Archiving

- Web historical content (bulk import)

- Web instant content (API)

Again, each of these has a different format and data markup.

One of the biggest challenges our team had to face was to unify all the data, both input and output, into one cohesive and scalable schema. Not only does this allow the project to run smoothly, it also future-proofs our processes for an eventual new stream of data (e.g. a Facebook voice-activated device).
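One way to picture a unified schema is a single record type, with small adapters on the way in and small formatters on the way out. The sketch below is an assumption-laden illustration, not our actual schema: the `Article` fields, both input layouts, and the placeholder data are all invented for the example.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class Article:
    """One unified record, whatever the source. Field names are illustrative."""
    source: str
    published: date
    headline: str
    body: str

def from_scan(record):
    """Adapter for a made-up scanning-vendor layout."""
    return Article(
        source="scan",
        published=date.fromisoformat(record["issue_date"]),
        headline=record["title"].strip(),
        body=" ".join(record["ocr_lines"]),
    )

def from_web(record):
    """Adapter for a made-up web export layout (dates as DD/MM/YYYY)."""
    day, month, year = (int(part) for part in record["date"].split("/"))
    return Article(
        source="web",
        published=date(year, month, day),
        headline=record["headline"].strip(),
        body=record["text"],
    )

def to_text_reply(article):
    """Format for a text bot: headline, ISO date, then the body."""
    return f"{article.headline} ({article.published.isoformat()})\n{article.body}"

def to_voice_reply(article):
    """Format for a voice bot: a sentence that reads naturally aloud."""
    spoken_date = f"{article.published.day} {article.published.strftime('%B %Y')}"
    return f"On {spoken_date}: {article.headline}. {article.body}"

# Placeholder data only; not a real article.
scanned = from_scan({
    "issue_date": "1956-06-14",
    "title": " Example headline ",
    "ocr_lines": ["Example body", "text."],
})
print(to_voice_reply(scanned))
```

Adding a new source or a new channel then means writing one more adapter or formatter, without touching the rest.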

Big wins and proudest moments

It has been a long and winding road. Along the way, we've encountered many challenges (some of which are above). We've also had some incredible team wins.

Here are a few of them.

1. Getting the go-ahead from none other than Google

Not to get too sappy here, but how cool is it to be working with Google? How amazing is it to put together a project that is so innovative Google themselves thought it warranted a million euros just to give it a try?

2. Adapting to Google Assistant

When the project first came about in early 2018, Alexa was all the rage. It was miles ahead of any competition. It made sense to build the project with Alexa in mind.

As 2018 progressed, though, Google Assistant started to close the gap. By 2019, Google Assistant and Alexa were head-to-head. It was time to course-correct and make Local Recall available through this new channel.

ubisend was one of the first chatbot companies to build a voice chatbot on the Google Assistant platform. We overhauled our data structure and schema, built the chatbot, and got it approved in record time.

3. Attracting passionate volunteers

Early on in the project, we realised we were going to need passionate individuals to help us out. A lot of the content we were scanning was highly damaged (by fire, water, and time). Scanning it and flinging whatever came out at a computer wasn't always going to work.

We built crowd-sourced editing software from the ground up, specifically for this project. This software allowed volunteers to see a scan of the original newspaper article and the computer output side by side, and correct the output manually.

This process allowed a few things:

- It sped the project up by delivering quality content into our chatbots.

- It improved our algorithms faster and earlier on than expected, which continues to pay dividends today.

- It created a community of individuals passionate about local history, the very market Local Recall is aiming to target.
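As an illustration of how manual corrections can feed back into the system, the sketch below compares the computer output with a volunteer's corrected version and lists the words that changed. It uses Python's standard `difflib`; the `word_corrections` helper and the sample sentence are hypothetical, not taken from the editing software itself.

```python
from difflib import SequenceMatcher

def word_corrections(ocr_text, corrected_text):
    """Return (ocr, corrected) pairs for every stretch of words a volunteer changed."""
    ocr_words = ocr_text.split()
    fixed_words = corrected_text.split()
    matcher = SequenceMatcher(a=ocr_words, b=fixed_words, autojunk=False)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            changes.append((" ".join(ocr_words[i1:i2]), " ".join(fixed_words[j1:j2])))
    return changes

ocr_output = "Tbe market opcned at noon"
volunteer_fix = "The market opened at noon"
print(word_corrections(ocr_output, volunteer_fix))
# [('Tbe', 'The'), ('opcned', 'opened')]
```

Pairs like these are the kind of signal that can be used to spot recurring recognition mistakes.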

Archant, and particularly project manager Chris Amos and archive editor Ben Craske, have done an incredible job raising awareness for the project over the last two years. Today, they have gathered close to 1,000 volunteers helping the project out.

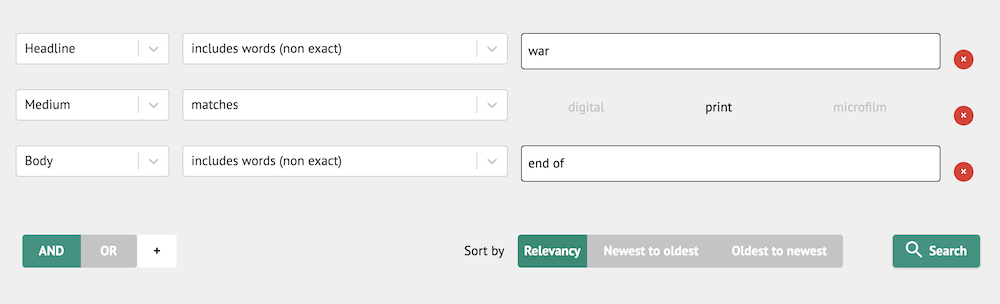

4. A journalist's dream search tool

As Local Recall was making progress digitising content, Archant journalists' ears pricked up. A comprehensive database of all our historical content, you say? Yes, please.

It quickly became evident that journalists could do a lot with this content. To assist them, we built a lightning-fast search tool. This web-based tool allows journalists to enter a few keywords and dates, and instantly returns a series of articles that match.

Considering this tool would search through billions of words, millions of articles, and terabytes of images, we had to work extra hard to make sure journalists didn't have to wait 14 days to get their search results back.

We managed to bring the whole process down to a few milliseconds. In an instant, journalists can now access 160 years of content. Success.

This tool was so well received, Archant decided to make it a core public product, allowing all Local Recall users to access it as well!

History lives on

Local Recall gives users a window to the past. It may be their past. It may be their family's past. It may simply be the past of the local, Norfolk area they live in.

Whatever the motivations to access it, historical events and the way they were originally told have things to teach us. Through the latest technologies, these lessons are now available to everyone, from students who discover to people who remember.

To learn more about Local Recall, visit their website.

To learn more about Archant, visit their website.

Learn about our chatbot development services.